The ICE Lab's research is supported by the NSF Disability and Rehabilitation Engineering (DARE) program, the SFSU Ken Fong Translational Research Fund, an SFSU Center for Computing for Life Sciences (CCLS) Mini Grant, and a CSUPERB Faculty-Student Collaborative Research Grant.

Next-Generation Neural-Machine Interfaces for Electromyography (EMG)-Controlled Neurorehabilitation

While neurorehabilitation system design has progressed remarkably over several decades, no system is currently capable of meeting all the technical specifications desired for commercial and clinical implementation. This project takes a computer engineering approach to improving the functionality and robustness of EMG-based neural-machine interface (NMI) technology. Software will be developed to manage sensor status and real-time responses, and novel computing platforms will be implemented to handle the large-scale, data-intensive computations required by responsive neurorehabilitation applications. The project integrates research and education through several avenues: enhancing undergraduate curricula with embedded research experiences, developing a massive open online course (MOOC) on neural-machine interfaces, and launching a K-12 through community college outreach program.
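EMG pattern recognition, the computation at the core of many EMG-controlled interfaces, commonly splits each channel into short overlapping windows and extracts time-domain features such as mean absolute value (MAV), waveform length (WL), zero crossings (ZC), and slope sign changes (SSC), the classic Hudgins feature set. The sketch below illustrates only that generic pipeline; it is not the ICE Lab's or MyoHMI's implementation. The function names, the window length (40 samples, i.e., 200 ms at an assumed 200 Hz sampling rate), and the step size are hypothetical choices for illustration.

```python
from typing import List, Sequence


def td_features(channel: Sequence[float], threshold: float = 0.0) -> List[float]:
    """Return [MAV, WL, ZC, SSC] for one channel of one analysis window.

    `threshold` is a hypothetical noise threshold applied to ZC and SSC.
    """
    n = len(channel)
    # Mean absolute value: average rectified amplitude.
    mav = sum(abs(v) for v in channel) / n
    # Waveform length: cumulative absolute sample-to-sample change.
    wl = sum(abs(channel[i + 1] - channel[i]) for i in range(n - 1))
    # Zero crossings: sign changes whose amplitude step reaches the threshold.
    zc = sum(
        1
        for i in range(n - 1)
        if channel[i] * channel[i + 1] < 0
        and abs(channel[i] - channel[i + 1]) >= threshold
    )
    # Slope sign changes: local peaks/valleys, where the slope flips direction.
    ssc = sum(
        1
        for i in range(1, n - 1)
        if (channel[i] - channel[i - 1]) * (channel[i] - channel[i + 1]) > threshold
    )
    return [mav, float(wl), float(zc), float(ssc)]


def extract_features(
    emg: Sequence[Sequence[float]], window_len: int = 40, step: int = 10
) -> List[List[float]]:
    """Slide a window over multichannel EMG (one list of samples per channel).

    Returns one feature vector per window: 4 features for each channel,
    concatenated in channel order.
    """
    n_samples = len(emg[0])
    features = []
    for start in range(0, n_samples - window_len + 1, step):
        vec: List[float] = []
        for ch in emg:
            vec.extend(td_features(ch[start:start + window_len]))
        features.append(vec)
    return features


if __name__ == "__main__":
    # Two toy channels: an alternating signal and a silent one.
    demo = [[1, -1, 1, -1, 1, -1], [0, 0, 0, 0, 0, 0]]
    for row in extract_features(demo, window_len=4, step=2):
        print(row)
```

In a full system, each feature vector would typically be passed to a classifier (linear discriminant analysis is a common choice in the EMG literature) to predict the user's intended movement for every window.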

Media:

- Engineering Student Justin Phan Won the 1st Place Award in the CSU Student Research Competition

- Three early-career faculty members win prestigious NSF CAREER grant

- Professors team up to design a better prosthetic arm

Selected publications:

- Ian M. Donovan, Kazunori Okada, and Xiaorong Zhang, “Adjacent Features for High-Density EMG Pattern Recognition”, In proc. of the 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC’18), Honolulu, HI, July 17-21, 2018

- Kattia Chang-Kam, Karina Abad, Ricardo Colin, Charles Tolentino, Cameron Malloy, Alexander David, Amelito G. Enriquez, Wenshen Pong, Zhaoshuo Jiang, Cheng Chen, Kwok-Siong Teh, Hamid Mahmoodi, Hao Jiang, and Xiaorong Zhang, “Engaging Community College Students in Emerging Human-Machine Interfaces Research through Design and Implementation of a Mobile Application for Gesture Recognition”, In proc. of the American Society for Engineering Education Zone IV Conference (ASEE-ZONE IV 2018), Boulder, CO, March 25-27, 2018 (Best Diversity Paper Award)

- Ian M. Donovan, Juris Puchin, Kazunori Okada, and Xiaorong Zhang, “Simple Space-Domain Features for Low-Resolution sEMG Pattern Recognition”, In proc. of the 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC’17), Jeju Island, Korea, July 11-15, 2017.

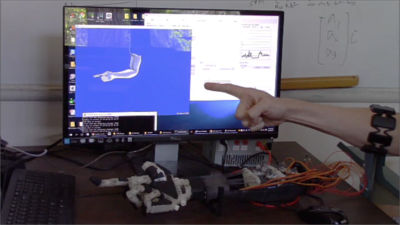

- Ian Donovan, Kevin Valenzuela, Alejandro Ortiz, Sergey Dusheyko, Hao Jiang, Kazunori Okada, and Xiaorong Zhang, “MyoHMI: A Low-Cost and Flexible Platform for Developing Real-Time Human Machine Interface for Myoelectric Controlled Applications”, accepted by the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC 2016), to be held in Budapest, Hungary, October 2016.

A video demonstrating our MyoHMI software controlling a virtual arm and a 3D-printed arm can be viewed here.

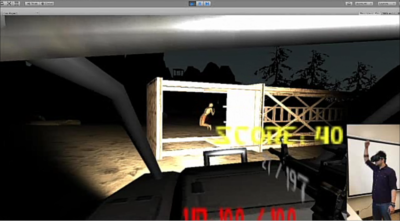

A video demonstrating our MyoHMI software controlling a first-person shooter VR game can be viewed here.

Intelligent Sensor-Empowered mHealth Stroke Rehabilitation System for Resource-Constrained Populations

Stroke is the leading cause of adult disability and the second leading cause of death worldwide. Nearly two-thirds of survivors suffer from permanent sensorimotor deficits (e.g., hemiparesis, spasticity, impaired motor coordination) that reduce their independence and quality of life. The impact of stroke is felt more acutely in rural and low-socioeconomic areas, which struggle with a shortage of rehabilitation professionals and technical resources. A collaboration between the ICE Lab in the School of Engineering and the NeuroTech Lab (Director: Dr. Charmayne Hughes) in the Health Equity Institute at SFSU, this project aims to integrate emerging mobile healthcare (mHealth) and embedded system technologies to develop an intelligent, sensor-empowered mHealth stroke rehabilitation system for individuals living in areas without ready access to, or geographic proximity to, rehabilitation services.

Selected publications and presentations:

- Jacob Dinardi, Chloe Gordon-Murer, Selena Sun, Alexander Louie, Moges Baye, Gashaw Jember Belay, Xiaorong Zhang, and Charmayne Hughes, “The outREACH System: Eliminating barriers to stroke rehabilitation in sub-Saharan Africa”, accepted by IST-Africa 2020, Kampala, Uganda, May 6-8, 2020.

- Charmayne Mary Lee Hughes, Moges Baye, Chloe Danielle Gordon-Murer, Alexander Louie, Selena Sun, Gashaw Jember Belay, and Xiaorong Zhang, “Quantitative Assessment of Upper Limb Motor Function in Ethiopian Acquired Brain Injured Patients using a Low-Cost Wearable Sensor”, Frontiers in Neurology, DOI: 10.3389/fneur.2019.01323, 2019.

- Charmayne M. L. Hughes, Alexander Louie, Selena Sun, Chloe Gordon-Murer, Gashaw Jember Belay, Moges Baye, and Xiaorong Zhang, “Development of a Post-stroke Upper Limb Rehabilitation Wearable Sensor for Use in Sub-Saharan Africa: A Pilot Validation Study”, Frontiers in Bioengineering and Biotechnology, DOI: 10.3389/fbioe.2019.00322, 2019.

- Chloe Zirbel, Charmayne Hughes, and Xiaorong Zhang, “The VRehab System: A Low-Cost Mobile Virtual Reality System for Post-Stroke Upper Limb Rehabilitation for Medically Underserved Populations”, In proc. of the 2018 IEEE Global Humanitarian Technology Conference (GHTC 2018), San Jose, CA, Oct. 18-21, 2018.

- Chloe Zirbel and Xiaorong Zhang, “Upper Limb Rehabilitation in Virtual Reality for Stroke Survivors”, SFSU Graduate Research and Creative Works Showcase, San Francisco, CA, April 19, 2018. (1st Place Award, area of Engineering and Computer Science (Undergrad-Level), CSU Research Competition)

The figure below shows the setup of a low-cost, mobile VR system called VRehab for stroke rehabilitation.

Toward Anti-Stuttering: Understanding the Relation between Stuttering and Anxiety Using Wearable Monitoring, Information Fusion, and Pattern Recognition Methods

Individuals who stutter often suffer from negative emotional reactions, which can make their stuttering more severe. One way to investigate the emotional aspects of stuttering is to measure physiological changes. Because people's emotional reactions are strongly influenced by their environment, physiological signals collected in a controlled laboratory setting cannot fully reflect real-world conditions. This project aims to use emerging wearable devices to monitor subjects' activities and emotions in their daily lives, providing practical data for further analysis. In addition, advanced information fusion and pattern recognition methods will be used to identify patterns in the data and thereby understand the relation between stuttering and anxiety.

Poster presentation at the 2016 SFSU COSE Project Showcase

Using Smart Wearable Devices for Seismic Measurements and Post-Earthquake Rescue

Building on advances in wearable technologies and the Internet of Things (IoT), this project aims to use smart wearable devices equipped with various sensors as a feasible and reliable means of capturing substantial ground motions. Such measurements can better serve earthquake research, supporting future earthquake prediction and the development of building codes, which act as guidelines for designing safer structures. Furthermore, the smart wearable device can act as a cyber "life-saving straw": by providing timely information about the wearer (e.g., location and health status), it can assist post-earthquake rescue, and by sharing the wearer's real-time health status with authorized receivers, it can help relieve anxiety.

Collaborator: Dr. Zhaoshuo Jiang, Civil Engineering, SFSU
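Capturing substantial ground motion with a wearable requires some way of deciding when shaking begins. One classic ingredient from seismology is the STA/LTA trigger: it compares a short-term average (STA) of signal energy with a long-term average (LTA) and fires when their ratio crosses a threshold. The sketch below is a minimal illustration of that generic technique, not this project's method. It assumes a 3-axis accelerometer reporting m/s², approximates dynamic acceleration as the vector magnitude minus a nominal 9.81 m/s² gravity term, and uses hypothetical window lengths and threshold. On its own it does not distinguish earthquake shaking from the wearer's own movements.

```python
import math
from typing import List, Sequence, Tuple

GRAVITY = 9.81  # assumed nominal gravity in m/s^2


def characteristic_function(samples: Sequence[Tuple[float, float, float]]) -> List[float]:
    """Energy-like signal: squared deviation of |a| from gravity, per sample."""
    return [(math.sqrt(x * x + y * y + z * z) - GRAVITY) ** 2 for x, y, z in samples]


def sta_lta_triggers(
    cf: Sequence[float], sta_len: int, lta_len: int, on_threshold: float
) -> List[int]:
    """Return sample indices where the STA/LTA ratio rises above on_threshold.

    Both averages use trailing windows that end at the current sample.
    After firing, the trigger re-arms only once the ratio drops below the threshold.
    """
    if not 0 < sta_len <= lta_len:
        raise ValueError("require 0 < sta_len <= lta_len")
    # Prefix sums make each window average O(1).
    prefix = [0.0]
    for v in cf:
        prefix.append(prefix[-1] + v)

    triggers: List[int] = []
    armed = True
    for i in range(lta_len - 1, len(cf)):
        sta = (prefix[i + 1] - prefix[i + 1 - sta_len]) / sta_len
        lta = (prefix[i + 1] - prefix[i + 1 - lta_len]) / lta_len
        ratio = sta / lta if lta > 0 else 0.0
        if armed and ratio >= on_threshold:
            triggers.append(i)  # rising edge: record onset of a candidate event
            armed = False
        elif ratio < on_threshold:
            armed = True
    return triggers


if __name__ == "__main__":
    # Synthetic energy trace: quiet background, a 10-sample burst, quiet again.
    trace = [1.0] * 100 + [100.0] * 10 + [1.0] * 100
    print(sta_lta_triggers(trace, sta_len=5, lta_len=50, on_threshold=3.0))
```

A detected onset could then mark the start of a window whose raw accelerations are uploaded for analysis; choosing the window lengths and threshold for real wearer data would require calibration.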